If you've been following my posts, and those of my co-authors, on the problems with the research from the Cornell Food and Brand Lab, there probably won't be very much new here. This post is mainly intended to collect a few problems in other articles that haven't been published yet, and which don't show any particularly new problem.

If you're trying to keep track of all of the problems, I recommend Tim van der Zee's excellent blog post entitled "The Wansink Dossier: An Overview", which he is updating from time to time to included new discoveries (including, hopefully, the ones below).

Wansink, B., & van Ittersum, K. (2007). Portion size me: Downsizing our consumption norms. Journal of the American Dietetic Association, 107, 1103–1106. http://dx.doi.org/10.1016/j.jada.2007.05.019

Wansink, B. (2010). From mindless eating to mindlessly eating better. Physiology & Behavior, 100, 454–463. http://dx.doi.org/10.1016/j.physbeh.2010.05.003

Wansink, B., & van Ittersum, K. (2013). Portion size me: Plate-size induced consumption norms and win-win solutions for reducing food intake and waste. Journal of Experimental Psychology: Applied, 19, 320–332. http://dx.doi.org/10.1037/a0035053

The 2010 article contains about 500 words (in the sections entitled "1.1. Consumption norms are determined by our environment", p. 455, and "1.2. Consumption monitoring — do people really know when they are full?", p. 456) that have been copied verbatim (with only very minor differences) from the sections entitled "Portion Sizes Create Our Consumption Norms" (p. 1104) and "We Underestimate the Calories in Large Portions" (pp. 1104–1105) in the 2007 article.

The 2013 article contains about 300 words (in the section entitled "Consumption Norms", p. 321) that have been copied verbatim (with only very minor differences) from the section entitled "Portion Sizes Create Our Consumption Norms" (p. 1104) in the 2007 article. An indication that this text has been merely copied and pasted can be found in the text "For instance, larger kitchenware in homes all [sic] suggest a consumption norm...", which appears in the 2013 article; in the 2007 article, "larger kitchenware" was one of three items in a list, so that the word "all" was not inappropriate in that case. (Remarkably, the 2013 article has a reference to the 2007 article in the middle of the text that was copied, without attribution, from that earlier article.)

The annotated versions of these articles, showing the apparently duplicated text, can be found here.

Wansink, B. (2003). Profiling nutritional gatekeepers: Three methods for differentiating influential cooks. Food Quality and Preference, 14, 289–297. http://dx.doi.org/10.1016/S0950-3293(02)00088-5

This is one of several studies from the Food and Brand Lab where questionnaires were sent out to different-sized samples of people chosen from different populations, and exactly 770 replies were received in each case, as I mentioned last week here.

I aggregated the reported means and F statistics from Tables 1 through 4 of this article, giving a total of 415 numbers reported to two decimal places. Here is the distribution of the last digits of these numbers:

I think it is reasonable to assume that these final digits ought, in principle, to be uniformly distributed. Following Mosimann, Dahlberg, Davidian, and Krueger (2002), we can calculate the chi-square goodness-of-fit statistic for the counts of each of the 10 different final digits across the four tables:

> chisq.test(c(28, 41, 54, 59, 39, 48, 38, 26, 40, 42))

X-squared = 22.855, df = 9, p-value = 0.006529

It appears that we can reject the null hypothesis that the last digits of these numbers resulted from random processes.

Another surprising finding in this article is that in Table 4, the personality traits "Curious" and "Imaginative" load identically on eight of the ten different categories of cook that are described. The factor loadings for these traits are described in two consecutive lines of the table. It's not clear if this is a copy/paste error, a "retyping from paper" error, or if these numbers are actually correct (which seems like quite a coincidence).

This article, annotated with the above near-duplicate line highlighted, can be found here.

Wansink, B., Cardello, A., & North, J. (2005). Fluid consumption and the potential role of canteen shape in minimizing dehydration. Military Medicine, 170, 871–873. http://dx.doi.org/10.7205/MILMED.170.10.871

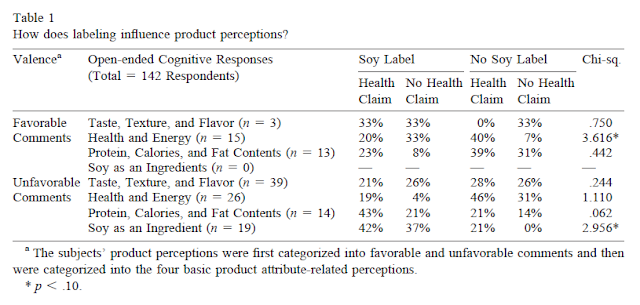

All of the reported test statistics in Table 1 are inconsistent with the means and standard deviations to which they are meant to correspond:

Actual ounces poured: reported F=21.2; possible range 24.39 to 24.65

Estimated ounces poured: reported F=2.3; possible range 2.57 to 2.63

Actual ounces consumed: reported F=16.1; possible range 17.77 to 17.97

Additionally, the degrees of freedom for these F tests (and others in the article) are consistently misreported as (1,49) instead of (1,48).

On p. 873, the following is reported: "A second study involving 37 military police cadets in basic

training at Fort Leonard Wood, Missouri, indicated that there was a similar tendency to pour more water into a short, wide opaque canteen than into a tall, narrow prototype canteen bottle (11.6 vs. 10.2 ounces; F(1,35) = 4.02; p < 0.05)". Here, the degrees of freedom appear to be correctly reported (assuming that each participant used only one type of canteen), but the correct p value for F(1,35) is .053. (This is one of the very rare problems in the Food and Brand Lab's output that statcheck might have been expected to detect. However, it seems that the "=" sign in the reported statistic is a graphic, not an ASCII = character, and so statcheck can't read it.)

An annotated version of this article, showing the apparently erroneous statistics and some other notes that I made as I read the article, can be found here.

If you're trying to keep track of all of the problems, I recommend Tim van der Zee's excellent blog post entitled "The Wansink Dossier: An Overview", which he is updating from time to time to included new discoveries (including, hopefully, the ones below).

Apparent duplication of text without appropriate attribution

Wansink, B., & van Ittersum, K. (2007). Portion size me: Downsizing our consumption norms. Journal of the American Dietetic Association, 107, 1103–1106. http://dx.doi.org/10.1016/j.jada.2007.05.019

Wansink, B. (2010). From mindless eating to mindlessly eating better. Physiology & Behavior, 100, 454–463. http://dx.doi.org/10.1016/j.physbeh.2010.05.003

Wansink, B., & van Ittersum, K. (2013). Portion size me: Plate-size induced consumption norms and win-win solutions for reducing food intake and waste. Journal of Experimental Psychology: Applied, 19, 320–332. http://dx.doi.org/10.1037/a0035053

The 2010 article contains about 500 words (in the sections entitled "1.1. Consumption norms are determined by our environment", p. 455, and "1.2. Consumption monitoring — do people really know when they are full?", p. 456) that have been copied verbatim (with only very minor differences) from the sections entitled "Portion Sizes Create Our Consumption Norms" (p. 1104) and "We Underestimate the Calories in Large Portions" (pp. 1104–1105) in the 2007 article.

The 2013 article contains about 300 words (in the section entitled "Consumption Norms", p. 321) that have been copied verbatim (with only very minor differences) from the section entitled "Portion Sizes Create Our Consumption Norms" (p. 1104) in the 2007 article. An indication that this text has been merely copied and pasted can be found in the text "For instance, larger kitchenware in homes all [sic] suggest a consumption norm...", which appears in the 2013 article; in the 2007 article, "larger kitchenware" was one of three items in a list, so that the word "all" was not inappropriate in that case. (Remarkably, the 2013 article has a reference to the 2007 article in the middle of the text that was copied, without attribution, from that earlier article.)

The annotated versions of these articles, showing the apparently duplicated text, can be found here.

Unusual distributions of terminal digits in data

Wansink, B. (2003). Profiling nutritional gatekeepers: Three methods for differentiating influential cooks. Food Quality and Preference, 14, 289–297. http://dx.doi.org/10.1016/S0950-3293(02)00088-5

This is one of several studies from the Food and Brand Lab where questionnaires were sent out to different-sized samples of people chosen from different populations, and exactly 770 replies were received in each case, as I mentioned last week here.

I aggregated the reported means and F statistics from Tables 1 through 4 of this article, giving a total of 415 numbers reported to two decimal places. Here is the distribution of the last digits of these numbers:

I think it is reasonable to assume that these final digits ought, in principle, to be uniformly distributed. Following Mosimann, Dahlberg, Davidian, and Krueger (2002), we can calculate the chi-square goodness-of-fit statistic for the counts of each of the 10 different final digits across the four tables:

> chisq.test(c(28, 41, 54, 59, 39, 48, 38, 26, 40, 42))

X-squared = 22.855, df = 9, p-value = 0.006529

It appears that we can reject the null hypothesis that the last digits of these numbers resulted from random processes.

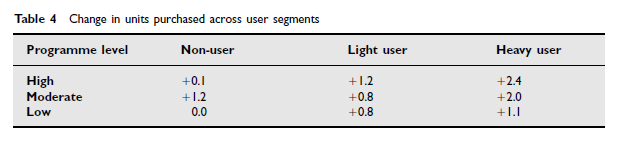

Another surprising finding in this article is that in Table 4, the personality traits "Curious" and "Imaginative" load identically on eight of the ten different categories of cook that are described. The factor loadings for these traits are described in two consecutive lines of the table. It's not clear if this is a copy/paste error, a "retyping from paper" error, or if these numbers are actually correct (which seems like quite a coincidence).

This article, annotated with the above near-duplicate line highlighted, can be found here.

Test statistics inconsistent with reported means and standard deviations

Wansink, B., Cardello, A., & North, J. (2005). Fluid consumption and the potential role of canteen shape in minimizing dehydration. Military Medicine, 170, 871–873. http://dx.doi.org/10.7205/MILMED.170.10.871

All of the reported test statistics in Table 1 are inconsistent with the means and standard deviations to which they are meant to correspond:

Actual ounces poured: reported F=21.2; possible range 24.39 to 24.65

Estimated ounces poured: reported F=2.3; possible range 2.57 to 2.63

Actual ounces consumed: reported F=16.1; possible range 17.77 to 17.97

Additionally, the degrees of freedom for these F tests (and others in the article) are consistently misreported as (1,49) instead of (1,48).

On p. 873, the following is reported: "A second study involving 37 military police cadets in basic

training at Fort Leonard Wood, Missouri, indicated that there was a similar tendency to pour more water into a short, wide opaque canteen than into a tall, narrow prototype canteen bottle (11.6 vs. 10.2 ounces; F(1,35) = 4.02; p < 0.05)". Here, the degrees of freedom appear to be correctly reported (assuming that each participant used only one type of canteen), but the correct p value for F(1,35) is .053. (This is one of the very rare problems in the Food and Brand Lab's output that statcheck might have been expected to detect. However, it seems that the "=" sign in the reported statistic is a graphic, not an ASCII = character, and so statcheck can't read it.)

An annotated version of this article, showing the apparently erroneous statistics and some other notes that I made as I read the article, can be found here.